Sharding is the process of splitting a large data set into smaller chunks and distributing them across multiple MongoDB instances in a distributed environment.

What is Sharding?

MongoDB sharding provides a scalable way to store a large amount of data across a number of servers rather than on a single server.

In practical terms, it is not feasible to store exponentially growing data on a single machine. Querying a huge amount of data stored on a single server could lead to high resource utilization and may not provide satisfactory read and write throughput.

There are two ways to scale a system to cope with growing data:

- Vertical

- Horizontal

Vertical scaling improves the performance of a single server by adding more powerful processors, more RAM, or more disk space. In practice, though, available technology and hardware configurations limit how far a single machine can be upgraded.

Horizontal scaling adds more servers and distributes the load across them. Since each machine handles only a subset of the whole dataset, it is more efficient and more cost-effective than deploying high-end hardware. The trade-off is the additional maintenance of a more complex infrastructure with a large number of servers.

MongoDB sharding is based on the horizontal scaling technique.

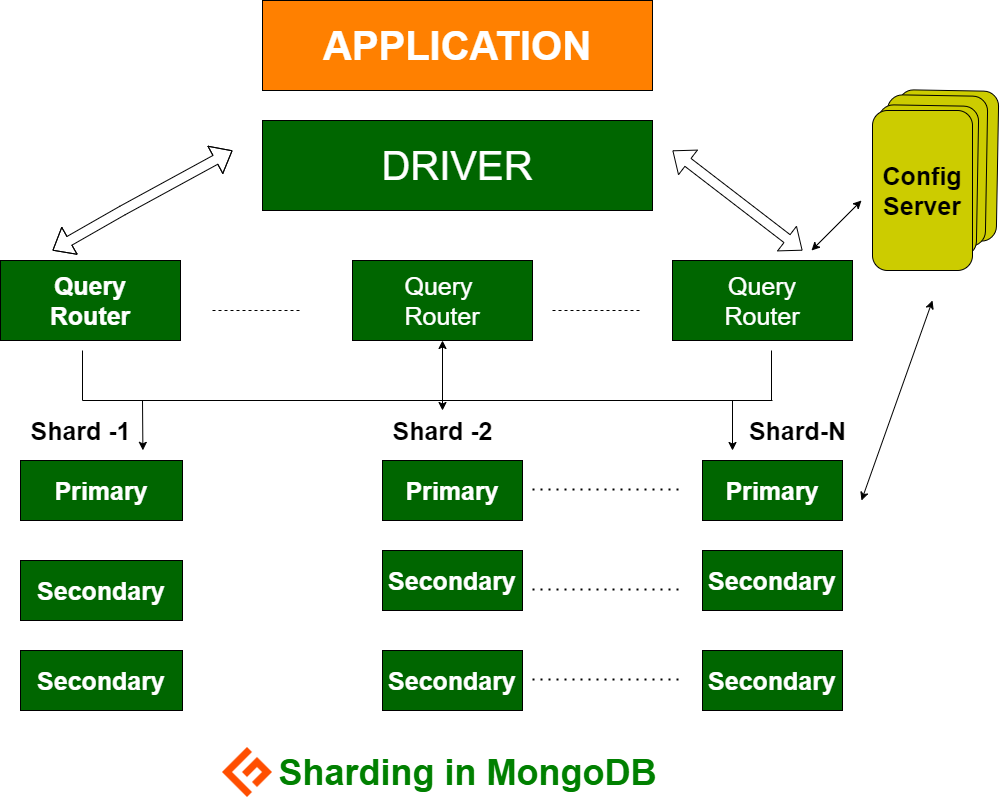

Sharding components

To achieve sharding in MongoDB, the following components are required:

Shard is a mongod instance that holds a subset of the original data. Each shard must be deployed as a replica set.

Mongos is a routing process that acts as an interface between a client application and the sharded cluster. It works as a query router to the shards.

Config Server is a mongod instance that stores the metadata and configuration details of the cluster. MongoDB requires the config servers to be deployed as a replica set.

Sharding Architecture

A MongoDB sharded cluster consists of a number of replica sets.

Each replica set consists of at least three mongod instances. A sharded cluster may contain multiple shards, and each shard is itself a replica set. The application interacts with mongos, which in turn communicates with the shards. Therefore, in a sharded cluster, applications never interact directly with the shard nodes. The query router distributes subsets of the data among the shards based on the shard key.

Sharding Implementation

Follow the steps below to set up sharding.

Step 1

- Start the config servers as members of a replica set so that replication can be enabled between them.

mongod --configsvr --port 27019 --replSet rs0 --dbpath C:\data\data1 --bind_ip localhost

mongod --configsvr --port 27018 --replSet rs0 --dbpath C:\data\data2 --bind_ip localhost

mongod --configsvr --port 27017 --replSet rs0 --dbpath C:\data\data3 --bind_ip localhost

Step 2

- Initialize the replica set on one of the config servers.

rs.initiate( { _id : "rs0", configsvr: true, members: [ { _id: 0, host: "IP:27017" }, { _id: 1, host: "IP:27018" }, { _id: 2, host: "IP:27019" } ] })

{

"ok" : 1,

"$gleStats" : {

"lastOpTime" : Timestamp(1593569257, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0),

"$clusterTime" : {

"clusterTime" : Timestamp(1593569257, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1593569257, 1)

}

Step 3

- Start the shard servers as members of a replica set so that replication can be enabled between them.

mongod --shardsvr --port 27020 --replSet rs1 --dbpath C:\data\data4 --bind_ip localhost

mongod --shardsvr --port 27021 --replSet rs1 --dbpath C:\data\data5 --bind_ip localhost

mongod --shardsvr --port 27022 --replSet rs1 --dbpath C:\data\data6 --bind_ip localhost

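MongoDB assigns each database a primary shard, which holds the unsharded collections of that database; with only one shard in the cluster, that shard is the primary for every database. To move a database to another primary shard, use the movePrimary command.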

Step 4

- Initialize the replica set on one of the shard servers.

rs.initiate( { _id : "rs1", members: [ { _id: 0, host: "IP:27020" }, { _id: 1, host: "IP:27021" }, { _id: 2, host: "IP:27022" } ] })

{

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1593569748, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1593569748, 1)

}

Step 5

- Start the mongos for the sharded cluster

mongos --port 40000 --configdb rs0/localhost:27019,localhost:27018,localhost:27017
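The `--configdb` value has the form `replicaSetName/host1:port1,host2:port2,...`. It must not contain spaces, because the command shell would split it into separate arguments. A minimal sketch of assembling such a string follows; the helper name `buildReplSetString` is hypothetical and not part of MongoDB.

```javascript
// Hypothetical helper: joins a replica set name and its members into the
// "name/host:port,host:port" form used by --configdb and sh.addShard().
function buildReplSetString(replSetName, hosts) {
  if (hosts.length === 0) {
    throw new Error("at least one host is required");
  }
  // Trim each entry so stray spaces cannot split the shell argument.
  const cleaned = hosts.map((h) => h.trim());
  for (const h of cleaned) {
    if (!/^[^\s,\/]+:\d+$/.test(h)) {
      throw new Error("expected host:port, got: " + h);
    }
  }
  return replSetName + "/" + cleaned.join(",");
}

const configDb = buildReplSetString("rs0", [
  "localhost:27019",
  "localhost:27018",
  " localhost:27017", // leading space on purpose, removed by trim()
]);
console.log("mongos --port 40000 --configdb " + configDb);
```

The same string format is what `sh.addShard()` expects in the next step, so one helper can serve both places.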

Step 6

- Connect to the mongos route server

mongo --port 40000

- Now, add the shard servers.

sh.addShard( "rs1/localhost:27020,localhost:27021,localhost:27022")

{

"shardAdded" : "rs1",

"ok" : 1,

"operationTime" : Timestamp(1593570212, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1593570212, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Step 7

- In the mongo shell, enable sharding on the database and its collections.

- Enable sharding on the database

sh.enableSharding("geekFlareDB")

{

"ok" : 1,

"operationTime" : Timestamp(1591630612, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1591630612, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Step 8

- To shard a collection, a shard key (described later in this article) is required.

Syntax: sh.shardCollection("dbName.collectionName", { "key" : 1 } )

sh.shardCollection("geekFlareDB.geekFlareCollection", { "key" : 1 } )

{

"collectionsharded" : "geekFlareDB.geekFlareCollection",

"collectionUUID" : UUID("0d024925-e46c-472a-bf1a-13a8967e97c1"),

"ok" : 1,

"operationTime" : Timestamp(1593570389, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1593570389, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Note: if the collection doesn’t exist, create it as follows.

db.createCollection("geekFlareCollection")

{

"ok" : 1,

"operationTime" : Timestamp(1593570344, 4),

"$clusterTime" : {

"clusterTime" : Timestamp(1593570344, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Step 9

Insert data into the collection. The MongoDB logs will start growing, indicating that the balancer is in action and trying to balance the data among the shards.
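A minimal sketch of generating test documents for this step. Every document carries the shard key field `key` chosen in Step 8; the `value` field and the helper name `makeSampleDocs` are made up for illustration.

```javascript
// Builds `count` sample documents. Each one contains the shard key field
// "key", which this article requires to be present in all documents.
function makeSampleDocs(count) {
  const docs = [];
  for (let i = 0; i < count; i++) {
    docs.push({ key: i, value: "item-" + i }); // "value" is illustrative only
  }
  return docs;
}

const docs = makeSampleDocs(1000);
console.log(docs.length, docs[0], docs[docs.length - 1]);

// Assumed usage in the mongo shell connected to mongos:
//   use geekFlareDB
//   db.geekFlareCollection.insertMany(docs)
```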

Step 10

The last step is to check the status of the sharding.

Sharding status

Check the sharding status by running the command below on the mongos router.

sh.status()

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5ede66c22c3262378c706d21")

}

shards:

{ "_id" : "rs1", "host" : "rs1/localhost:27020,localhost:27021,localhost:27022", "state" : 1 }

active mongoses:

"4.2.7" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 5

Last reported error: Could not find host matching read preference { mode: "primary" } for set rs1

Time of Reported error: Tue Jun 09 2020 15:25:03 GMT+0530 (India Standard Time)

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

rs1 1024

too many chunks to print, use verbose if you want to force print

{ "_id" : "geekFlareDB", "primary" : "rs1", "partitioned" : true, "version" : { "uuid" : UUID("a770da01-1900-401e-9f34-35ce595a5d54"), "lastMod" : 1 } }

geekFlareDB.geekFlareCol

shard key: { "key" : 1 }

unique: false

balancing: true

chunks:

rs1 1

{ "key" : { "$minKey" : 1 } } -->> { "key" : { "$maxKey" : 1 } } on : rs1 Timestamp(1, 0)

geekFlareDB.geekFlareCollection

shard key: { "product" : 1 }

unique: false

balancing: true

chunks:

rs1 1

{ "product" : { "$minKey" : 1 } } -->> { "product" : { "$maxKey" : 1 } } on : rs1 Timestamp(1, 0)

{ "_id" : "test", "primary" : "rs1", "partitioned" : false, "version" : { "uuid" : UUID("fbc00f03-b5b5-4d13-9d09-259d7fdb7289"), "lastMod" : 1 } }

mongos>

Data distribution

The mongos router distributes the load among shards based on the shard key, and the balancer then works to keep the data evenly distributed.

The key components that distribute data among shards are:

- The balancer evens out the subsets of data among the shards. It is a background process that migrates chunks between shards until the data is more evenly distributed. To check the state of the balancer, run

sh.status() or sh.getBalancerState() or sh.isBalancerRunning()

mongos> sh.isBalancerRunning()

true

mongos>

OR

mongos> sh.getBalancerState()

true

mongos>

After inserting the data, we can see activity in the mongos log stating that chunks are being moved to specific shards; that is, the balancer is at work trying to balance the data across the shards. Running the balancer can affect performance, so it is advisable to restrict it to a defined balancing window.
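A balancing window is given as a start and a stop time in HH:MM form, and it may cross midnight (for example 23:00 to 06:00). The function below is only a sketch of that time check, under the assumption that the window is inclusive of its start and exclusive of its stop; `inBalancerWindow` and `toMinutes` are hypothetical names, not MongoDB functions.

```javascript
// Converts "HH:MM" to minutes since midnight.
function toMinutes(hhmm) {
  const [h, m] = hhmm.split(":").map(Number);
  return h * 60 + m;
}

// Returns true when `now` falls inside the window [start, stop).
// A window whose stop is earlier than its start wraps past midnight.
function inBalancerWindow(now, start, stop) {
  const t = toMinutes(now);
  const s = toMinutes(start);
  const e = toMinutes(stop);
  if (s <= e) {
    return t >= s && t < e;
  }
  return t >= s || t < e;
}

console.log(inBalancerWindow("02:30", "23:00", "06:00")); // true
console.log(inBalancerWindow("12:00", "23:00", "06:00")); // false

// On a real cluster the window is stored in the config database, e.g.:
//   use config
//   db.settings.updateOne(
//     { _id: "balancer" },
//     { $set: { activeWindow: { start: "23:00", stop: "06:00" } } },
//     { upsert: true }
//   )
```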

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5efbeff98a8bbb2d27231674")

}

shards:

{ "_id" : "rs1", "host" : "rs1/127.0.0.1:27020,127.0.0.1:27021,127.0.0.1:27022", "state" : 1 }

{ "_id" : "rs2", "host" : "rs2/127.0.0.1:27023,127.0.0.1:27024,127.0.0.1:27025", "state" : 1 }

active mongoses:

"4.2.7" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: yes

Failed balancer rounds in last 5 attempts: 5

Last reported error: Could not find host matching read preference { mode: "primary" } for set rs2

Time of Reported error: Wed Jul 01 2020 14:39:59 GMT+0530 (India Standard Time)

Migration Results for the last 24 hours:

1024 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

rs2 1024

too many chunks to print, use verbose if you want to force print

{ "_id" : "geekFlareDB", "primary" : "rs2", "partitioned" : true, "version" : { "uuid" : UUID("a8b8dc5c-85b0-4481-bda1-00e53f6f35cd"), "lastMod" : 1 } }

geekFlareDB.geekFlareCollection

shard key: { "key" : 1 }

unique: false

balancing: true

chunks:

rs2 1

{ "key" : { "$minKey" : 1 } } -->> { "key" : { "$maxKey" : 1 } } on : rs2 Timestamp(1, 0)

{ "_id" : "test", "primary" : "rs2", "partitioned" : false, "version" : { "uuid" : UUID("a28d7504-1596-460e-9e09-0bdc6450028f"), "lastMod" : 1 } }

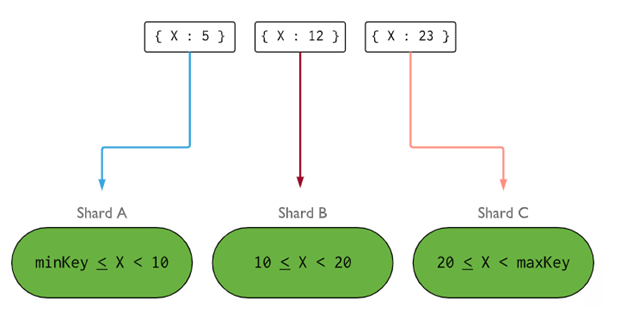

mongos>

- The shard key determines how the documents of a sharded collection are distributed among the shards. It can be a single indexed field or an indexed compound field, and it must be present in every document inserted into the collection. The data is partitioned into chunks, and each chunk covers a range of shard key values. Based on that range, the query router decides which shard stores the chunk.
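As a rough illustration of range-based chunks, the sketch below models a small chunk table and looks up the shard for a given key value. The chunk boundaries and the `findShard` name are invented for the example; on a real cluster the chunk metadata lives on the config servers.

```javascript
// Each chunk covers the half-open key range [min, max) and lives on one shard.
// -Infinity and Infinity stand in for MongoDB's $minKey and $maxKey.
const chunks = [
  { min: -Infinity, max: 100, shard: "rs1" },
  { min: 100, max: 200, shard: "rs2" },
  { min: 200, max: Infinity, shard: "rs1" },
];

// Returns the shard that owns the chunk containing `keyValue`.
function findShard(chunkTable, keyValue) {
  const chunk = chunkTable.find((c) => keyValue >= c.min && keyValue < c.max);
  if (!chunk) {
    throw new Error("no chunk covers key " + keyValue);
  }
  return chunk.shard;
}

console.log(findShard(chunks, 42));  // rs1
console.log(findShard(chunks, 100)); // rs2
console.log(findShard(chunks, 999)); // rs1
```

A freshly sharded collection starts with a single chunk from `$minKey` to `$maxKey`, as the `sh.status()` output in this article shows.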

A shard key can be selected by considering five properties:

- Cardinality

- Write distribution

- Read distribution

- Read targeting

- Read locality

An ideal shard key lets MongoDB distribute the load evenly among all shards. Choosing a good shard key is extremely important.
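One way to get a first impression of a candidate key is to measure it on a sample of documents. The sketch below counts distinct values (cardinality) and the share of documents held by the most common value, which hints at how unevenly writes could land. The field names and the `keyStats` helper are hypothetical, and this is only a rough check, not a substitute for analysing real query patterns.

```javascript
// Counts how many sample documents fall on each distinct value of `field`.
function keyStats(docs, field) {
  if (docs.length === 0) {
    throw new Error("need at least one sample document");
  }
  const counts = new Map();
  for (const doc of docs) {
    const v = doc[field];
    counts.set(v, (counts.get(v) || 0) + 1);
  }
  const largest = Math.max(...counts.values());
  return {
    cardinality: counts.size,            // number of distinct key values
    largestShare: largest / docs.length, // share held by the most common value
  };
}

const sample = [
  { country: "IN", userId: 1 },
  { country: "IN", userId: 2 },
  { country: "IN", userId: 3 },
  { country: "US", userId: 4 },
];
console.log(keyStats(sample, "country")); // { cardinality: 2, largestShare: 0.75 }
console.log(keyStats(sample, "userId"));  // { cardinality: 4, largestShare: 0.25 }
```

In this made-up sample, `country` has low cardinality and one value dominates, so it would concentrate data on few chunks, while `userId` spreads documents evenly.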

Removing the shard node

Before removing a shard from the cluster, its data must be migrated safely to the remaining shards. MongoDB takes care of draining the data to the other shards before the shard is removed.
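To make the idea of draining concrete, here is a deliberately simplified model: every chunk on the draining shard is moved, one at a time, to whichever remaining shard currently holds the fewest chunks. This is an assumption made for illustration and not MongoDB's actual balancer algorithm; `drainShard` and the chunk counts are hypothetical.

```javascript
// `chunkCounts` maps shard name -> number of chunks it holds.
// Moves every chunk off `draining` to the least-loaded remaining shard
// and returns a new object without the drained shard.
function drainShard(chunkCounts, draining) {
  if (!(draining in chunkCounts)) {
    throw new Error("unknown shard: " + draining);
  }
  const result = { ...chunkCounts };
  const targets = Object.keys(result).filter((s) => s !== draining);
  if (targets.length === 0) {
    throw new Error("cannot drain the only shard");
  }
  while (result[draining] > 0) {
    // Pick the remaining shard with the fewest chunks as the target.
    targets.sort((a, b) => result[a] - result[b]);
    result[targets[0]] += 1;
    result[draining] -= 1;
  }
  delete result[draining];
  return result;
}

console.log(drainShard({ rs1: 6, rs2: 2, rs3: 4 }, "rs1")); // { rs2: 6, rs3: 6 }
```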

Follow the steps below to remove a shard.

Step 1

First, we need to determine the name and hosts of the shard to be removed. The command below lists all the shards in the cluster along with their state.

db.adminCommand( { listShards: 1 } )

mongos> db.adminCommand( { listShards: 1 } )

{

"shards" : [

{

"_id" : "rs1",

"host" : "rs1/127.0.0.1:27020,127.0.0.1:27021,127.0.0.1:27022",

"state" : 1

},

{

"_id" : "rs2",

"host" : "rs2/127.0.0.1:27023,127.0.0.1:27024,127.0.0.1:27025",

"state" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1593572866, 15),

"$clusterTime" : {

"clusterTime" : Timestamp(1593572866, 15),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Step 2

Issue the command below to remove the required shard from the cluster. Once issued, the balancer moves the chunks off the draining shard and balances them among the remaining shards.

db.adminCommand( { removeShard: "shardedReplicaNodes" } )

mongos> db.adminCommand( { removeShard: "rs1/127.0.0.1:27020,127.0.0.1:27021,127.0.0.1:27022" } )

{

"msg" : "draining started successfully",

"state" : "started",

"shard" : "rs1",

"note" : "you need to drop or movePrimary these databases",

"dbsToMove" : [ ],

"ok" : 1,

"operationTime" : Timestamp(1593572385, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1593572385, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Step 3

To check the status of the draining shard, issue the same command again.

db.adminCommand( { removeShard: "rs1/127.0.0.1:27020,127.0.0.1:27021,127.0.0.1:27022" } )

We need to wait until the draining of the data is complete. The msg and state fields show whether draining has completed, as follows:

"msg" : "draining ongoing",

"state" : "ongoing",

We can also check the status with the command sh.status(). Once the shard has been removed, it no longer appears in the output. While draining is still in progress, the shard is listed with its draining status set to true.

Step 4

Keep checking the draining status with the same command until the shard has been removed completely.
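The repeated check can be scripted. The sketch below polls a status function until it reports the state "completed" or a maximum number of checks is reached. `waitForDrain` and the `checkStatus` callback are hypothetical; here the callback is a stand-in that replays the states shown in this article instead of talking to a server.

```javascript
// Calls `checkStatus()` until it reports state "completed" or `maxChecks`
// is reached. `checkStatus` is an assumed callback returning the removeShard
// response; in the mongo shell it could wrap
// db.adminCommand({ removeShard: "rs1" }).
function waitForDrain(checkStatus, maxChecks) {
  const seen = [];
  for (let i = 0; i < maxChecks; i++) {
    const res = checkStatus();
    seen.push(res.state);
    if (res.state === "completed") {
      return { done: true, states: seen };
    }
    // A real script would pause here, for example sleep(5000) in the shell.
  }
  return { done: false, states: seen };
}

// Stand-in for the server: replays "started", "ongoing", ..., "completed".
const replies = ["started", "ongoing", "ongoing", "completed"];
let call = 0;
const fakeStatus = () => ({
  state: replies[Math.min(call++, replies.length - 1)],
});

console.log(waitForDrain(fakeStatus, 10));
```

Capping the number of checks keeps the loop from running forever if draining stalls, for instance when a database still has the draining shard as its primary.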

Once draining is complete, the output of the command reports the message and state as completed.

"msg" : "removeshard completed successfully",

"state" : "completed",

"shard" : "rs1",

"ok" : 1,

Step 5

Finally, we need to check the remaining shards in the cluster. To check the status, run sh.status() or db.adminCommand( { listShards: 1 } )

mongos> db.adminCommand( { listShards: 1 } )

{

"shards" : [

{

"_id" : "rs2",

"host" : "rs2/127.0.0.1:27023,127.0.0.1:27024,127.0.0.1:27025",

"state" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1593575215, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1593575215, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Here, we can see the removed shard is no longer present in the list of shards.

Benefits of Sharding over Replication

- In replication, the primary node handles all write operations, whereas the secondary servers maintain backup copies or serve read-only operations. With sharding on top of replica sets, the load is distributed among a number of servers.

- A single replica set is limited to 50 members (at most 7 of them voting), but there is no restriction on the number of shards.

- Replication requires high-end hardware or vertical scaling to handle large datasets, which is far more expensive than adding servers in a sharded setup.

- In replication, read performance can be improved by adding more secondary servers, whereas in sharding both read and write performance improve as more shards are added.

Sharding limitation

- A sharded cluster doesn’t support a unique index across the shards unless the unique index is prefixed with the full shard key.

- Update operations that target a single document in a sharded collection must contain the shard key or the _id field in the query.

- An existing collection can be sharded only if its size doesn’t exceed a certain threshold. This threshold can be estimated from the average size of the shard key values and the configured chunk size.

- Sharding imposes operational limits on the maximum collection size and the number of splits.

- Choosing the wrong shard key leads to performance problems.
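The size threshold for sharding an existing collection can be estimated with the formula from MongoDB's limits documentation, sketched below. This assumes the MongoDB 4.2-era releases used in this article (later releases changed these limits), and `maxShardableSizeMB` is a hypothetical helper name.

```javascript
// Estimate, assuming the documented formula for older MongoDB releases:
//   maxSplits              = 16 MB / average shard key size in bytes
//   maxCollectionSize (MB) = maxSplits * (chunkSize in MB / 2)
function maxShardableSizeMB(avgKeyBytes, chunkSizeMB) {
  if (avgKeyBytes <= 0 || chunkSizeMB <= 0) {
    throw new Error("sizes must be positive");
  }
  const maxSplits = Math.floor((16 * 1024 * 1024) / avgKeyBytes);
  return { maxSplits, maxSizeMB: maxSplits * (chunkSizeMB / 2) };
}

// 512-byte shard key values with the default 64 MB chunk size:
console.log(maxShardableSizeMB(512, 64)); // { maxSplits: 32768, maxSizeMB: 1048576 }
```

With these inputs the estimate comes to 1,048,576 MB, or about 1 TB; smaller shard key values raise the limit proportionally.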

Conclusion

MongoDB offers built-in sharding for running a large database without compromising performance. I hope the above helps you set up MongoDB sharding. Next, you may want to get familiar with some of the commonly used MongoDB commands.