Sites with heavy traffic need a web server that can keep up with demand, and Nginx, with its event-driven architecture, is a popular choice. Handling 100,000 requests per minute (about 1,667 requests per second) requires a tuned Nginx configuration running on a well-provisioned server.

This article walks through the Nginx settings that matter most at that volume, step by step:

Step 1: Increase the number of worker processes

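Nginx handles requests in a small number of single-threaded worker processes, each of which serves many connections through an event loop. Matching the number of workers to the number of CPU cores lets Nginx use every available processor; running more workers than cores usually brings no further benefit. Set the count with the `worker_processes` directive in the main (top-level) context of nginx.conf, for example `worker_processes 8;`.

This creates 8 worker processes, which suits a server with 8 CPU cores. Setting `worker_processes auto;` instead lets Nginx detect the number of cores itself.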
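To see roughly what the one-worker-per-core rule of thumb means on a given machine, the Python sketch below counts the CPU cores available to the current process and formats a matching directive. The helper names are hypothetical and not part of Nginx; Nginx’s own `auto` detection may count cores differently.

```python
import os


def available_cores() -> int:
    """Count the CPU cores this process is allowed to run on."""
    # os.sched_getaffinity exists only on some platforms (e.g. Linux); it can report
    # fewer CPUs than os.cpu_count() when the process is pinned with taskset or a cpuset.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def worker_processes_directive(cores: int) -> str:
    """Format an nginx.conf line with one worker per core (at least one)."""
    return f"worker_processes {max(1, cores)};"


if __name__ == "__main__":
    print(worker_processes_directive(available_cores()))
```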

Step 2: Tune worker connections

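The `worker_connections` directive sets the maximum number of simultaneous connections that each worker process can open. It goes inside the `events` block of nginx.conf, for example `events { worker_connections 1024; }`.

This sets the limit to 1,024 connections per worker. The limit covers every connection a worker holds, including connections to proxied servers, and the actual number cannot exceed the operating system’s limit on open files.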
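Multiplying the two settings gives a rough ceiling on concurrent clients. The sketch below assumes the example values (8 workers, 1,024 connections each) and that a proxied request holds one client connection plus one upstream connection; the function name is hypothetical. Each connection also uses a file descriptor, so the open-file limit for workers (which the `worker_rlimit_nofile` directive can raise) should comfortably exceed `worker_connections`.

```python
def max_concurrent_clients(worker_processes: int, worker_connections: int,
                           proxied: bool = False) -> int:
    """Rough upper bound on simultaneous client connections."""
    total = worker_processes * worker_connections
    # A proxied request holds two connections (client side and upstream side),
    # so only about half the slots are available for clients.
    return total // 2 if proxied else total


if __name__ == "__main__":
    print(max_concurrent_clients(8, 1024))                # 8192
    print(max_concurrent_clients(8, 1024, proxied=True))  # 4096
```

With proxying, the example configuration tops out at about 4,096 concurrent clients, a number worth comparing against the concurrency your traffic actually needs.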

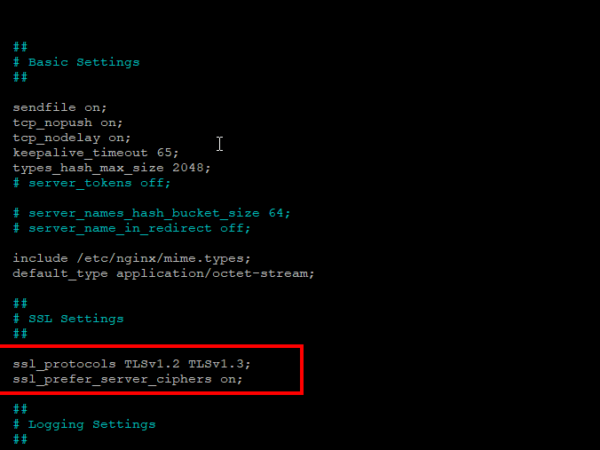

Step 3: Configure keepalive connections

Keepalive connections allow multiple requests to be sent over the same TCP connection, reducing the overhead of creating a new connection for each request. This can be configured by adding the following lines to the nginx.conf file:

```nginx
keepalive_timeout 65;
keepalive_requests 100000;
```

This keeps an idle keepalive connection open for up to 65 seconds and allows up to 100,000 requests over a single connection before Nginx closes it.
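The effect of keepalive is visible from the client side: requests that reuse one TCP connection all leave from the same local port. The Python sketch below uses the standard library’s `http.server` as a stand-in for Nginx (an assumption so the example runs without an Nginx install) and sends several requests over a single `http.client.HTTPConnection`.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class KeepAliveHandler(BaseHTTPRequestHandler):
    # With HTTP/1.1 the server keeps the connection open after each response.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        # Content-Length tells the client where the response ends,
        # so the server does not have to close the connection to signal it.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def client_ports_for_requests(n_requests: int) -> list:
    """Send n_requests over one connection and return the client port used for each."""
    server = HTTPServer(("127.0.0.1", 0), KeepAliveHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    conn = http.client.HTTPConnection(host, port, timeout=5)
    ports = []
    try:
        for _ in range(n_requests):
            conn.request("GET", "/")
            response = conn.getresponse()
            response.read()  # drain the body so the connection can be reused
            ports.append(conn.sock.getsockname()[1])
    finally:
        conn.close()  # closing first lets the server's handler see EOF and return
        server.shutdown()
        server.server_close()
    return ports


if __name__ == "__main__":
    print(client_ports_for_requests(3))
```

Against a real Nginx server, the same loop would keep reusing one connection until `keepalive_requests` is reached or the connection sits idle longer than `keepalive_timeout`.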

Step 4: Optimize caching

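Caching reduces the load on the server by answering repeat requests from stored copies instead of generating each response again. Nginx can cache responses from proxied servers with the following directives in nginx.conf:

```nginx
# http context
proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my_cache:10m inactive=60m;
proxy_cache_key "$scheme$request_method$host$request_uri";
proxy_cache_valid 200 60m;
# server or location context: enable the cache zone defined above
proxy_cache my_cache;
```

This creates a cache in /var/cache/nginx with a two-level directory hierarchy and a 10 MB shared memory zone named `my_cache` for cache keys, removes cached items that have not been requested for 60 minutes, builds the cache key from the scheme, request method, host, and request URI, and caches responses with a 200 status code for 60 minutes. The `proxy_cache my_cache;` line turns caching on for the requests it applies to; without it, the cache is defined but never used.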
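The caching rules above can be modeled in a few lines of Python. This is a toy in-memory model, not how Nginx stores cache files on disk: it builds keys the same way as the `proxy_cache_key` line, keeps only 200 responses, and expires them after 60 minutes like `proxy_cache_valid 200 60m`. It does not model `inactive=60m` (eviction of entries nobody has requested for 60 minutes) or the upstream caching headers that Nginx also honors. The `origin` callable stands in for a hypothetical upstream server.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


def cache_key(scheme: str, method: str, host: str, request_uri: str) -> str:
    # Mirrors proxy_cache_key "$scheme$request_method$host$request_uri":
    # the four values are concatenated with no separator.
    return f"{scheme}{method}{host}{request_uri}"


@dataclass
class Entry:
    body: bytes
    expires_at: float


class ResponseCache:
    """Toy model of proxy_cache_valid 200 60m: only 200 responses are kept, for one hour."""

    def __init__(self, ttl_seconds: float = 60 * 60,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Entry] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self.entries.get(key)
        if entry is None or entry.expires_at <= self.clock():
            self.entries.pop(key, None)  # drop an expired entry lazily
            return None
        return entry.body

    def store(self, key: str, status: int, body: bytes) -> None:
        if status == 200:  # other status codes are not cached in this model
            self.entries[key] = Entry(body, self.clock() + self.ttl)


def fetch(cache: ResponseCache, key: str,
          origin: Callable[[], Tuple[int, bytes]]) -> Tuple[bytes, bool]:
    """Return (body, cache_hit); on a miss, ask the hypothetical origin and cache the result."""
    cached = cache.get(key)
    if cached is not None:
        return cached, True
    status, body = origin()
    cache.store(key, status, body)
    return body, False
```

Passing a fake clock makes expiry easy to test without waiting an hour.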

Step 5: Configure load balancing

Load balancing can distribute incoming requests across multiple servers to help handle a high volume of requests. Nginx can be configured to load balance by adding the following lines to the nginx.conf file:

```nginx
upstream backend {
    server backend1.example.com;
    server backend2.example.com;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;
    }
}
```

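This defines an upstream group named `backend` containing two servers and proxies every request received on port 80 to that group. By default, Nginx distributes requests across the group with weighted round-robin, and every server has the same weight unless a `weight` parameter is set.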
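The sketch below illustrates how requests are spread across an upstream group. It implements smooth weighted round-robin, the scheme Nginx’s round-robin balancer is commonly described as using; with equal weights, as in the two-server example above, it simply alternates between servers. The 5:1:1 weights in the usage lines are hypothetical and only show the effect of a `weight` parameter.

```python
from typing import Dict, List


def smooth_weighted_round_robin(weights: Dict[str, int], n_requests: int) -> List[str]:
    """Return the backend chosen for each of n_requests, in order."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n_requests):
        # Each backend earns credit equal to its weight on every round.
        for name, weight in weights.items():
            current[name] += weight
        # The backend with the most credit wins (the first one listed on a tie)...
        chosen = max(current, key=current.get)
        # ...and pays back the total weight, so heavier backends win more often
        # without being picked in long back-to-back runs.
        current[chosen] -= total
        picks.append(chosen)
    return picks


if __name__ == "__main__":
    # Equal weights, as in the upstream block above: requests alternate.
    print(smooth_weighted_round_robin(
        {"backend1.example.com": 1, "backend2.example.com": 1}, 4))
    # Hypothetical weights 5:1:1 spread the heavy backend's turns out.
    print("".join(smooth_weighted_round_robin({"a": 5, "b": 1, "c": 1}, 7)))  # aabacaa
```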

These steps give Nginx a solid baseline for high traffic, but whether a particular server reaches 100,000 requests per minute depends on its hardware, workload, and traffic patterns, so expect to adjust the values above. Regular monitoring and tuning help keep the server performing optimally.

Common Bottlenecks That Can Limit Nginx’s Performance

Several common bottlenecks can keep a server from reaching 100,000 requests per minute:

- CPU: Nginx’s event-driven workers use little CPU per request, but CPU-intensive work such as TLS handshakes, gzip compression, and complex regular expressions can saturate the processors. Once every core is busy, the CPU limits how much traffic Nginx can handle.

- Memory: Nginx uses a small amount of memory per connection, but when handling a large number of connections, memory usage can add up quickly. If the server runs out of memory, it can slow down or crash, leading to a bottleneck.

- Disk I/O: Nginx relies on disk I/O to serve static files or log requests. If the disk I/O subsystem is slow or overloaded, it can become a bottleneck and limit Nginx’s performance.

- Network I/O: Nginx communicates with clients and upstream servers over the network. If the network interface becomes a bottleneck, it can limit the amount of traffic Nginx can handle.

- Upstream servers: If Nginx is proxying requests to upstream servers (such as a web application or database server), those servers can become a bottleneck if they are unable to keep up with the demand.

- Application code: If the application behind Nginx has performance issues, it limits how much traffic the whole stack can handle, however well Nginx itself is tuned. For example, a slow database query slows down the entire request/response cycle.
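Little’s law (requests in flight = arrival rate × average time each request takes) shows why upstream and application latency matter so much. The sketch below converts the target of 100,000 requests per minute into a per-second rate and estimates how many requests are in flight at once; the 50 ms and 2 s latencies are illustrative assumptions, not measurements.

```python
def requests_per_second(requests_per_minute: float) -> float:
    return requests_per_minute / 60


def in_flight_requests(rate_per_second: float, avg_latency_seconds: float) -> float:
    # Little's law: L = lambda * W
    return rate_per_second * avg_latency_seconds


if __name__ == "__main__":
    rate = requests_per_second(100_000)
    print(f"{rate:.0f} requests/second")  # 1667 requests/second
    for latency in (0.05, 2.0):
        print(f"{latency}s latency -> {in_flight_requests(rate, latency):.0f} in flight")
```

At 50 ms per request only about 83 requests are in flight at any moment, but if a slow query pushes latency to 2 seconds, roughly 3,333 requests are in flight, each holding a client connection, an upstream connection, and application resources.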

To achieve the goal of handling 100,000 requests per minute, it’s important to identify and address any potential bottlenecks. This may involve upgrading hardware, optimizing Nginx configuration, tuning the operating system, optimizing application code, and scaling out horizontally to multiple servers.

Conclusion

Nginx is a powerful web server that can handle a large amount of traffic with the right configuration. Matching worker processes to CPU cores, raising worker connections, enabling keepalive connections, caching upstream responses, and load balancing across backend servers all move Nginx toward 100,000 requests per minute. The exact values depend on your server setup and traffic patterns, so regular monitoring and tuning are what keep the server meeting your traffic needs.