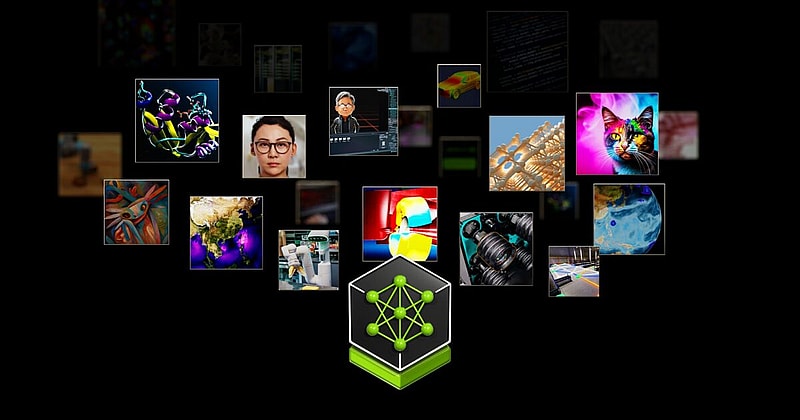

NVIDIA has launched dozens of enterprise-grade generative AI microservices that businesses can use to create and deploy custom applications on their own platforms while retaining full ownership and control of their intellectual property.

Built on top of the NVIDIA CUDA® platform, the catalog of cloud-native microservices includes NVIDIA NIM microservices for optimized inference on more than two dozen popular AI models from NVIDIA and its partner ecosystem.

In addition, NVIDIA accelerated software development kits, libraries and tools can now be accessed as NVIDIA CUDA-X™ microservices for retrieval-augmented generation (RAG), guardrails, data processing, HPC and more.

The curated selection of microservices adds a new layer to NVIDIA’s full-stack computing platform. This layer connects the AI ecosystem of model developers, platform providers and enterprises with a standardized path to run custom AI models optimized for NVIDIA’s CUDA installed base of hundreds of millions of GPUs across clouds, data centers, workstations and PCs.

Among the first companies to access the new NVIDIA generative AI microservices are Adobe, Cadence, CrowdStrike, Getty Images, SAP, ServiceNow and Shutterstock.

“Established enterprise platforms are sitting on a goldmine of data that can be transformed into generative AI copilots,” said Jensen Huang, founder and CEO of NVIDIA. “Created with our partner ecosystem, these containerized AI microservices are the building blocks for enterprises in every industry to become AI companies.”

Reducing deployment times from “weeks to minutes”

NIM microservices provide pre-built containers powered by NVIDIA inference software that enable developers to reduce deployment times from weeks to minutes.

According to NVIDIA, NIM provides the “fastest and highest-performing” production AI containers for deploying models from NVIDIA, AI21, Adept, Cohere, Getty Images and Shutterstock, as well as open models from Google, Hugging Face, Meta, Microsoft, Mistral AI and Stability AI.

ServiceNow also announced that it is using NIM to develop and deploy new domain-specific copilots and other generative AI applications faster and more cost effectively.

Customers will be able to access NIM microservices from Amazon SageMaker, Google Kubernetes Engine and Microsoft Azure AI, and integrate with popular AI frameworks like Deepset, LangChain and LlamaIndex.

Generative AI for enterprises

In addition to leading application providers, data, infrastructure and compute platform providers across the NVIDIA ecosystem are working with NVIDIA microservices to bring generative AI to enterprises.

Enterprises can deploy the NVIDIA microservices included with NVIDIA AI Enterprise 5.0 across the infrastructure of their choice, including the Amazon Web Services (AWS), Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure clouds.

Developers will be able to experiment with NVIDIA microservices at no charge, while enterprises can deploy production-grade NIM microservices with NVIDIA AI Enterprise 5.0 running on NVIDIA-Certified Systems and cloud platforms.

-

Shruti Khairnar is a seasoned B2B reporter with a diverse background in financial journalism. She has written for prominent B2B publications including FinTech Futures, ESG Investor, Sustainabonds and more.