In this tutorial, we will look at how to configure the Nginx web server for a production environment.

A web server in a production environment is different from a web server in a test environment in terms of performance, security and so on.

By default, Nginx ships with a ready-to-use configuration once you have successfully installed it. However, the default configuration is not good enough for a production environment. Therefore we will focus on how to configure Nginx to perform better under both normal load and heavy traffic spikes, and how to secure it from users who intend to abuse it.

If you have not installed Nginx on your machine, you can check how to do so here. It shows you how to install Nginx on a Unix platform. Choose to install Nginx from the source files because the pre-built Nginx packages do not come with some of the modules used in this tutorial.

Requirements

You need to have the following installed on your machine. Make sure you run this tutorial on a Debian-based platform such as Ubuntu.

- Ubuntu or any other Debian-based platform

- wget

- Vim (text editor)

Also, you need to run or execute some commands in this tutorial as a root user via the sudo command.

Understanding Nginx Config Structure

In this section we will look at the following:

- Structure of Nginx

- Sections such as events, http, and mail

- Valid syntax of Nginx

At the end of this section, you will understand the structure of Nginx configuration, the purpose or roles of sections as well as how to define valid directives inside sections.

The complete Nginx configuration file has a logical structure that is composed of directives grouped into a number of sections such as the events section, http section, mail section and so on.

The primary configuration file is located at /etc/nginx/nginx.conf whilst other configuration files are located at /etc/nginx.

Main Context

This section or context contains directives that sit outside specific sections such as the mail section.

Directives such as user nginx;, worker_processes 1;, error_log /var/log/nginx/error.log warn; and pid /var/run/nginx.pid; are placed within the main section or context.

Note that some of these directives, such as worker_processes, are valid only in the main context and cannot be placed inside a section such as events.

Sections

Sections in Nginx define the configuration for Nginx modules.

For instance, the http section holds the configuration for the HTTP modules such as ngx_http_core_module, the events section holds the directives that control connection processing, whilst the mail section holds the configuration for the mail modules such as ngx_mail_core_module.

You can check here for a complete list of sections in Nginx.

Directives

Directives in Nginx are made up of a directive name and a number of arguments such as the following:

worker_processes auto;

Here worker_processes is the directive name whilst auto serves as its argument. Directives end with a semi-colon as shown above.

Finally, the Nginx configuration file must adhere to a particular set of rules. The following is the valid syntax of an Nginx configuration:

- Valid directives begin with a directive name followed by one or more arguments

- All valid directives end with a semi-colon ;

- Sections are defined with curly braces {}

- A section can be embedded in another section

- Configuration outside any section is part of the Nginx global configuration

- Lines starting with the hash sign # are comments
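As a rough illustration of these rules, the skeleton below shows how the main context, the events section, and nested http, server, and location sections fit together. The values, the example.com server name, and the /var/www/html path are placeholders chosen for illustration, not recommended settings.

```nginx
# Main (global) context: directives outside any section
user nginx;
worker_processes auto;

events {
    # Connection-processing directives
    worker_connections 512;
}

http {
    # Settings shared by all virtual servers
    server {
        listen 80;
        server_name example.com;   # placeholder name

        location / {
            root /var/www/html;    # placeholder path
        }
    }
}
```

Every directive ends with a semi-colon, every section is wrapped in curly braces, and the location section sits inside server, which in turn sits inside http.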

Tuning Nginx for Performance

In this section, we will configure Nginx to perform better during heavy traffic and traffic spikes.

We will look at how to configure:

- Workers

- Disk I/O activity

- Network activity

- Buffers

- Compression

- Caching

- Timeout

Still in the terminal, type the following command to change to the Nginx directory and list its content.

cd nginx && ls

Search for the folder conf. Inside this folder is the nginx.conf file.

We will make use of this file to configure Nginx.

Now execute the following commands to navigate to the conf folder and open the file nginx.conf with the vim editor

cd conf

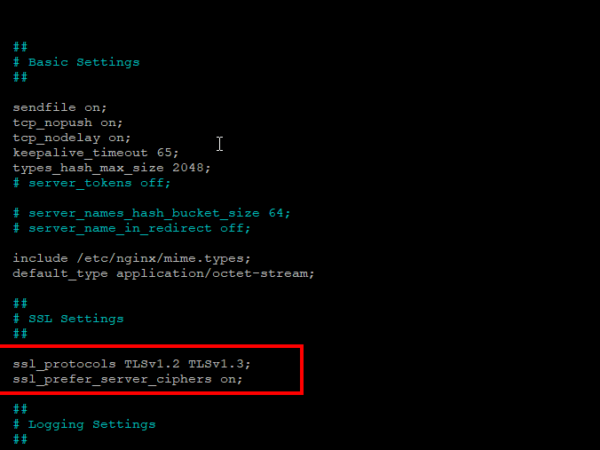

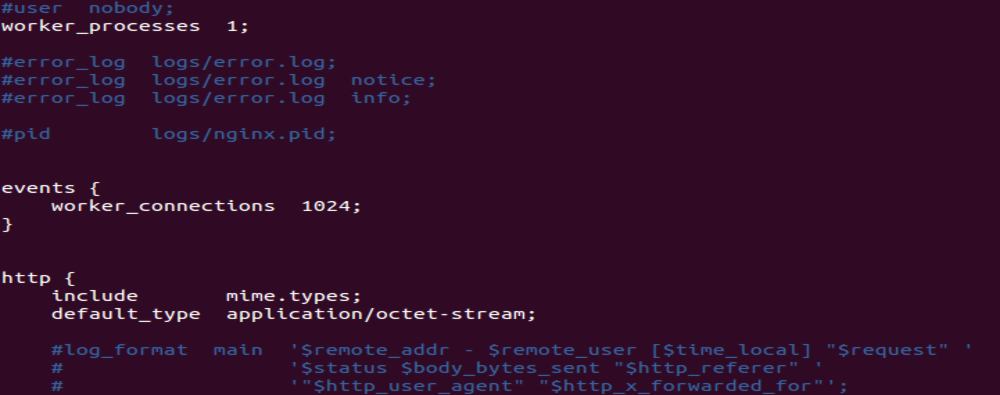

sudo vim nginx.confBelow is a screenshot of how the nginx.conf file looks like by default.

Workers

To enable Nginx to perform better, we need to configure its workers. Some worker directives belong in the main context and the rest in the events section. Configuring Nginx workers enables you to process connections from clients effectively.

Assuming you have not closed the vim editor, press the i key on the keyboard to edit the nginx.conf file.

Copy and paste the following into the file. The worker_processes and worker_rlimit_nofile directives are valid only in the main context, so they go outside the events section:

worker_processes auto;
worker_rlimit_nofile 20960;

events {
    worker_connections 1024;
    multi_accept on;
    accept_mutex on;
    accept_mutex_delay 500ms;
    use epoll;
    epoll_events 512;
}

worker_processes: This directive controls the number of worker processes in Nginx. Setting it to auto lets Nginx detect the number of available CPU cores and start one worker per core. The best value also depends on factors such as the number of disks, the server load, and the network subsystem. You can check the number of cores yourself by executing the command lscpu on the terminal.

worker_connections: This directive sets the maximum number of simultaneous connections that a single worker can open. The default value is 512, but we set it to 1,024 to allow each worker to handle more simultaneous connections.

worker_rlimit_nofile: This directive is related to worker_connections. It sets the limit on the number of files, including sockets, that a worker process can have open. In order to handle a large number of simultaneous connections, we set it to a large value.

multi_accept: This directive allows a worker to accept many connections in the queue at a time. A queue in this context simply means a sequence of data objects waiting to be processed.

accept_mutex: This directive is turned off by default in recent Nginx versions. Because we have configured many workers in Nginx, we turn it on as shown in the code above so that workers accept new connections one by one.

accept_mutex_delay: This directive determines how long a worker should wait before trying to accept new connections again. Once accept_mutex is turned on, a mutex lock is assigned to a worker for a timeframe specified by accept_mutex_delay. When the timeframe is up, the next worker in line is ready to accept new connections.

use: This directive specifies the method used to process connections from clients. In this tutorial, we set the value to epoll because we are working on an Ubuntu platform. The epoll method is the most effective processing method for Linux platforms.

epoll_events: The value of this directive specifies the number of events Nginx will transfer to the kernel.
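To see how these directives relate to each other, here is a minimal sketch that assumes a hypothetical 4-core server. The upper bound on simultaneous connections is roughly worker_processes multiplied by worker_connections, and worker_rlimit_nofile is kept above worker_connections because each connection needs at least one file descriptor. The numbers are illustrative assumptions, not tuned recommendations.

```nginx
# Main context: these two directives are not valid inside events { }
worker_processes 4;            # assumed 4-core machine; "auto" detects this for you
worker_rlimit_nofile 8192;     # open-file limit per worker, above worker_connections

events {
    # Up to 4 x 1024 = 4096 simultaneous connections in total
    worker_connections 1024;
}
```

If Nginx is also proxying to backend servers, each client request can hold two connections, so the effective number of clients is lower than this upper bound.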

Disk I/O

In this section, we will configure asynchronous I/O activity in Nginx to allow it to perform effective data transfer and improve cache effectiveness.

Disk I/O simply refers to write and read operations between the hard disk and RAM. We will make use of sendfile() function inside the kernel to send small files.

You can make use of the http section, location section and server section for directives in this area.

The server section is placed within the http section, and the location section within the server section, to make the configuration readable.

Copy and paste the following location sections inside a server section embedded within the http section.

location /pdf/ {
    sendfile on;
    aio on;
}

location /audio/ {
    directio 4m;
    directio_alignment 512;
}

sendfile: To utilize operating system resources, set the value of this directive to on. sendfile transfers data between file descriptors within the OS kernel space without copying it to the application buffers. This directive will be used to serve small files.

directio: This directive improves cache effectiveness by letting reads of large files bypass the operating system's page cache, so that large files do not push frequently used small files out of the cache. It applies to files at or above the configured size, which is 4m in the code above. This directive will be used to serve larger files such as audio and video.

aio: This directive enables asynchronous file I/O when set to on, so that a worker is not blocked while it waits for the disk. When set to threads, Nginx offloads these operations to a pool of threads instead. Multi-threading is an execution model that allows multiple threads to execute separately from each other whilst sharing their hosting process resources.

directio_alignment: This directive assigns a block size value to the data transfer. It is related to the directio directive.
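The directives above can also be combined in a single location. The sketch below assumes a hypothetical /video/ location on Linux and an Nginx build that includes file AIO support. With this combination, Nginx uses asynchronous direct I/O for files at or above the directio size and sendfile for smaller files. The 8m threshold is an illustrative value.

```nginx
location /video/ {
    sendfile on;     # used for files smaller than the directio threshold
    aio on;          # asynchronous I/O for files at or above the threshold
    directio 8m;     # bypass the page cache for files of 8 MB or more
}
```

This avoids having to split small and large files into separate locations by hand.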

Network layer

In this section, we will make use of directives such as tcp_nodelay and tcp_nopush to prevent small packets from waiting for a specified timeframe of about 200 milliseconds before they are sent at once.

Usually when packets are transferred in ‘pieces’, they tend to saturate the highly loaded network. So John Nagle built a buffering algorithm to resolve this issue. The purpose of Nagle’s buffering algorithm is to prevent small packets from saturating the highly loaded network.

Copy and paste the following code inside the http section.

http {
    tcp_nopush on;
    tcp_nodelay on;
}

tcp_nodelay: When this directive is disabled, small packets wait for a specified period before they are sent at once. Nginx enables it by default, and we set it to on explicitly so that data is sent as soon as it is available.

tcp_nopush: Because we have enabled the tcp_nodelay directive, small packets are sent immediately. However, if you still want buffering similar to John Nagle's algorithm, you can also enable tcp_nopush, which lets Nginx send the response header and the beginning of a file together in full packets. It only takes effect when sendfile is enabled.
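Since tcp_nopush only has an effect when sendfile is in use, the three directives are usually set together. A minimal sketch for the http section:

```nginx
http {
    sendfile on;      # tcp_nopush has an effect only when sendfile is in use
    tcp_nopush on;    # send headers and the start of a file in full packets
    tcp_nodelay on;   # do not delay small packets on keep-alive connections
}
```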

Buffers

Let’s take a look at how to configure request buffers in Nginx to handle requests effectively. A buffer is a temporary storage where data is kept for some time and processed.

You can copy the code below into the server section.

server {
    client_body_buffer_size 8k;
    client_max_body_size 2m;
    client_body_in_single_buffer on;
    client_body_temp_path temp_files 1 2;
    client_header_buffer_size 1m;
    large_client_header_buffers 4 8k;
}

It is important to understand what those buffer lines do.

client_body_buffer_size: This directive sets the buffer size for reading the request body. By default it equals two memory pages, which is 8k on 32-bit platforms and x86-64 and usually 16k on other 64-bit platforms, so 8k or 16k is a sensible value depending on your system.

client_max_body_size: If you intend to handle large file uploads, you need to set this directive to 2m or more. By default, it is set to 1m.

client_body_in_file_only: When this directive is set, Nginx saves the whole request body to a temporary file instead of keeping it in the buffer. This is useful for debugging but is not recommended for a production environment.

client_body_in_single_buffer: Sometimes not all of the request body is stored in a buffer. The rest of it is written to a temporary file. However, if you intend to store the complete request body in a single buffer, you need to enable this directive.

client_header_buffer_size: You can use this directive to allocate a buffer for request headers. The example above sets it to 1m. Note that the Nginx default is 1k, which is enough for most requests, so a much smaller value is usually sufficient.

large_client_header_buffers: This directive sets the maximum number and size of the buffers used for reading large request headers. You can set the maximum number and buffer size to 4 and 8k respectively.
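The client_body_in_file_only directive is described above but does not appear in the server example. As a sketch of how it might be used while debugging, the fragment below assumes a hypothetical /upload/ location and a hypothetical temporary directory.

```nginx
location /upload/ {
    # Write every request body to a temporary file; "clean" removes the
    # file once the request has been processed. Intended for debugging.
    client_body_in_file_only clean;
    client_body_temp_path /tmp/nginx_body 1 2;   # hypothetical directory
}
```

With the value on instead of clean, the temporary files are kept after the request finishes, which fills the disk quickly under real traffic.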

Compression

Compressing the amount of data transferred over the network is another way of ensuring that your web server performs better. In this section, we will make use of directives such as gzip, gzip_comp_level, and gzip_min_length to compress data.

Paste the following code inside the http section as shown below:

http {
    gzip on;
    gzip_comp_level 2;
    gzip_min_length 1000;
    gzip_types text/xml text/css;
    gzip_http_version 1.1;
    gzip_vary on;
    gzip_disable "MSIE [4-6]\.";
}

gzip: If you want to enable compression, set the value of this directive to on. By default, it is disabled.

gzip_comp_level: You can make use of this directive to set the compression level. In order not to waste CPU resources, you should not set the compression level too high. Between 1 and 9, you can set the compression level to 2 or 3.

gzip_min_length: Sets the minimum response length for compression, taken from the Content-Length response header field. The default is 20 bytes. The code above raises it to 1000 bytes so that very small responses are not compressed.

gzip_types: This directive allows you to choose the response types you want to compress. By default, the response type text/html is always compressed. You can add other response types such as text/css as shown in the code above.

gzip_http_version: This directive allows you to choose the minimum HTTP version of a request for a compressed response. You can make use of the default value which is 1.1.

gzip_vary: When the gzip directive is enabled, this directive adds the header field Vary: Accept-Encoding to the response.

gzip_disable: Some browsers such as Internet Explorer 6 do not have support for gzip compression. This directive makes use of the User-Agent request header field to disable compression for certain browsers.
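If your site also serves plain text, JSON, or JavaScript, the list of compressed types can be extended. The following is a sketch with an illustrative set of MIME types. The gzip_proxied line is an addition not covered above and assumes you also want to compress responses to requests that arrive through a proxy.

```nginx
http {
    gzip on;
    gzip_min_length 1000;
    # text/html is always compressed, so it is not listed here
    gzip_types text/plain text/css text/xml application/json application/javascript;
    # Also compress responses to requests that arrived through a proxy
    gzip_proxied any;
}
```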

Caching

Leverage caching features to avoid loading the same data multiple times. Nginx provides features to cache static content metadata via the open_file_cache directive.

You can place this directive inside the server, location and http sections.

http {
    open_file_cache max=1000 inactive=30s;
    open_file_cache_valid 30s;
    open_file_cache_min_uses 4;
    open_file_cache_errors on;
}

open_file_cache: This directive is disabled by default. Enable it if you want to implement caching in Nginx. This directive stores metadata of files and directories commonly requested by users.

open_file_cache_valid: You can use this directive to set a period, usually in seconds, after which the information about files and directories held in open_file_cache is re-validated.

open_file_cache_min_uses: Nginx clears an entry from open_file_cache after the period of inactivity set by the inactive parameter. You can use this directive to set the minimum number of accesses during that period required for an entry to stay in the cache, which identifies the files and directories that are actively accessed.

open_file_cache_errors: You can make use of this directive to allow Nginx to cache errors such as “permission denied” or “can’t access this file” when files are accessed. So anytime a resource is accessed by a user who does not have the right to do so, Nginx displays the same error report “permission denied”.
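Because open_file_cache can be set at the http, server, and location levels, a general setting can be overridden for a specific area. The sketch below assumes a hypothetical /reports/ location whose files change often and should not have their metadata cached.

```nginx
http {
    open_file_cache max=1000 inactive=30s;

    server {
        location /reports/ {
            # hypothetical location whose files change often
            open_file_cache off;
        }
    }
}
```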

Timeout

Configure timeout using directives such as keepalive_timeout and keepalive_requests to prevent long-waiting connections from wasting resources.

In the http section, copy and paste the following code:

http {
    keepalive_timeout 30s;
    keepalive_requests 30;
    send_timeout 30s;
}

keepalive_timeout: Keep connections alive for about 30 seconds. The default is 75 seconds.

keepalive_requests: Sets the maximum number of requests that can be served over a single keep-alive connection before it is closed. You can set the number of requests to 20 or 30.

keepalive_disable: If you want to disable keep-alive connections for a specific group of browsers, use this directive.

send_timeout: Set a timeout for transmitting data to the client.
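The keepalive_disable directive is not part of the code above. As a small sketch, it takes one or more browser names. The choice of browsers here is only an example.

```nginx
http {
    keepalive_timeout 30s;
    # Disable keep-alive for old Internet Explorer and for Safari
    keepalive_disable msie6 safari;
}
```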

Security Configuration for Nginx

The following focuses solely on how to securely configure Nginx itself rather than a web application. Thus we will not look at web-based attacks like SQL injection and so on.

In this section we will look at how to configure the following:

- Restrict access to files and directories

- Configure logs to monitor malicious activities

- Prevent DDoS

- Disable directory listing

Restrict access to files and directories

Let’s look at how to restrict access to sensitive files and directories via the following methods.

By making use of HTTP Authentication

We can restrict access to sensitive files or areas not meant for public viewing by prompting for authentication from users or even administrators. Run the following command to install a password file creation utility if you have not installed it.

sudo apt-get install -y apache2-utils

Next, create a password file and a user using the htpasswd tool as shown below. The htpasswd tool is provided by the apache2-utils package. You will be prompted to enter a password for the user.

sudo htpasswd -c /etc/apache2/.htpasswd mike

You can confirm that you have successfully created the user and its hashed password via the following command:

cat /etc/apache2/.htpasswd

Inside the location section, you can paste the following code to prompt users for authentication using the auth_basic directive.

location /admin {
    auth_basic "Admin Area";
    auth_basic_user_file /etc/apache2/.htpasswd;
}

By making use of the Allow directive

In addition to the auth_basic directive, we can make use of the allow directive, together with deny, to restrict access by IP address.

Inside the location section, you can use the following code to allow only the specified IP addresses to access the sensitive area. The deny all; line rejects every other client. Without it, the allow lines alone would not block anyone.

location /admin {
    allow 192.168.34.12;
    allow 192.168.12.34;
    deny all;
}

Configure logs to monitor malicious activities

In this section, we will configure error and access logs to specifically monitor valid and invalid requests. You can examine these logs to find out who logged in at a particular time, or which user accessed a particular file and so on.

error_log: Allows you to set up logging to a particular file, to syslog, or to stderr. You can also specify the level of error messages you want to log.

access_log: Allows you to write users' requests to a file such as access.log.
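The access_log directive also accepts the name of a log format. Besides the predefined combined format, you can define your own with log_format. The sketch below uses a hypothetical format named timed that adds the request processing time, which can help when looking for unusually slow or abusive requests.

```nginx
http {
    # "timed" is a hypothetical format name
    log_format timed '$remote_addr [$time_local] "$request" '
                     '$status $body_bytes_sent $request_time';

    access_log logs/access.log timed;
}
```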

Inside the http section, you can use the following.

http {
    access_log logs/access.log combined;
    error_log logs/warn.log warn;
}

Prevent DDoS

You can protect Nginx from a DDoS attack using the following methods:

Limiting user requests

You can make use of the limit_req_zone and limit_req directives to limit the rate of requests sent by users. The code below allows 30 requests per minute from each client IP address.

Add the limit_req_zone directive to the http section and the limit_req directive to a location section embedded in the server section.

limit_req_zone $binary_remote_addr zone=one:10m rate=30r/m;

server {
    location /admin.html {
        limit_req zone=one;
    }
}

Limit the number of connections

You can make use of the limit_conn and limit_conn_zone directives to limit connections to certain locations or areas. For instance, the code below accepts at most 10 simultaneous connections from each client IP address.

The limit_conn_zone directive goes in the http section and the limit_conn directive in the location section.

limit_conn_zone $binary_remote_addr zone=addr:10m;

server {
    location /products/ {
        limit_conn addr 10;
    }
}

Terminate slow connections

You can make use of timeout directives such as client_body_timeout and client_header_timeout to control how long Nginx will wait for the client to send the request body and the request header.

Add the following inside the server section.

server {
    client_body_timeout 5s;
    client_header_timeout 5s;
}

It would also be a good idea to stop DDoS attacks at the edge by leveraging cloud-based solutions as mentioned here.
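Before moving on, the rate and connection limits from this section can be combined in one place. The sketch below is one possible arrangement, not a tuned policy. The burst size of 5 and the 429 status code are assumptions chosen for illustration. The zone names match the ones used earlier in this section.

```nginx
http {
    limit_req_zone $binary_remote_addr zone=one:10m rate=30r/m;
    limit_conn_zone $binary_remote_addr zone=addr:10m;

    server {
        location /admin.html {
            # Allow short bursts of up to 5 extra requests without delaying them
            limit_req zone=one burst=5 nodelay;
            limit_req_status 429;     # reply "Too Many Requests" instead of 503

            # At most 10 simultaneous connections per client IP address
            limit_conn addr 10;
            limit_conn_status 429;
        }
    }
}
```

Without burst, any request that exceeds the rate is rejected at once, which can be too strict for legitimate users who load several resources in quick succession.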

Disable directory listing

You can make use of the autoindex directive to prevent directory listing as shown in the code below. You need to set it to the value off to disable directory listing.

location / {
    autoindex off;
}

Conclusion

We have configured the Nginx web server to perform effectively and secured it from excessive abuse in a production environment. If you are using Nginx for Internet-facing web applications then you should also consider using a CDN and cloud-based security for better performance and security.